QA vs UAT: What’s the difference?

Discover the key differences between QA and UAT in website testing—what they mean, who runs them, and why you need both for reliable releases.

Most teams toss “QA” around like it covers every kind of testing. But QA (Quality Assurance) and UAT (User Acceptance Testing) are not the same.

QA asks: “Does it work?”

UAT asks: “Does it work for us?”

Here’s the breakdown: definitions, differences, and why you need both if you care about bug-free, business-ready websites and products.

What is QA (Quality Assurance)?

QA testing makes sure the website or product functions as designed before release.

It’s an important part of software testing.

- Who: QA engineers, testers, or developers.

- Focus: functionality, performance, compliance.

- Goal: catch defects early, meet internal quality standards, and ensure stability.

QA is the lab test: it verifies the website or product is technically sound.

QA process overview

A typical QA cycle includes:

- Analyze requirements – clarify what needs to be tested and expected outcomes.

- Plan tests – create a test plan that decides scope, coverage, and timing.

- Design test cases – write detailed scripts for functionality and edge cases.

- Execute tests – run manual and automated checks.

- Retest and regression – verify fixes and ensure nothing else broke.

- Report results – the QA team documents findings for developers and stakeholders.

Example: Testing a login flow might involve writing cases for valid logins, invalid passwords, rate limiting, and multi-device behavior.
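To make the login example concrete, here is a minimal sketch of what such QA test cases could look like in Python. The `LoginService` class, its three-attempt lockout, and the test names are hypothetical stand-ins for a real system under test. The tests are plain functions using `assert`, so a runner such as pytest could collect them, or they can be called directly.

```python
from dataclasses import dataclass, field
from typing import Dict


class AccountLocked(Exception):
    """Raised when someone tries to log in to a locked account."""


@dataclass
class LoginService:
    # Hypothetical in-memory login service standing in for the real system under test.
    users: Dict[str, str]
    max_failures: int = 3
    failures: Dict[str, int] = field(default_factory=dict)

    def login(self, username: str, password: str) -> bool:
        # Reject every attempt once the failure limit has been reached.
        if self.failures.get(username, 0) >= self.max_failures:
            raise AccountLocked(username)
        if self.users.get(username) == password:
            self.failures[username] = 0  # a successful login resets the counter
            return True
        self.failures[username] = self.failures.get(username, 0) + 1
        return False


def make_service() -> LoginService:
    return LoginService(users={"alice": "correct-horse"})


def test_valid_login() -> None:
    assert make_service().login("alice", "correct-horse")


def test_invalid_password() -> None:
    assert not make_service().login("alice", "wrong")


def test_locks_after_three_failures() -> None:
    svc = make_service()
    for _ in range(3):
        assert not svc.login("alice", "wrong")
    # Even the correct password must be refused once the account is locked.
    try:
        svc.login("alice", "correct-horse")
    except AccountLocked:
        pass
    else:
        raise AssertionError("account should be locked after three failures")


if __name__ == "__main__":
    for test in (test_valid_login, test_invalid_password, test_locks_after_three_failures):
        test()
    print("all login tests passed")
```

A real suite would drive the actual login endpoint or UI and add cases for rate limiting and multi-device sessions; the in-memory service only keeps the sketch self-contained.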

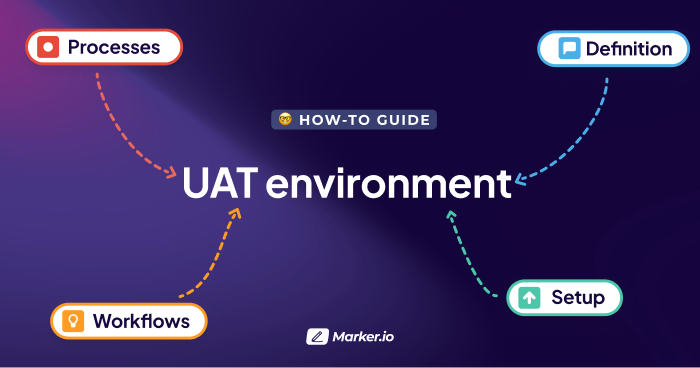

What is UAT (User Acceptance Testing)?

UAT validates that the website or product meets business goals and delivers the right user experience.

- Who: end-users, stakeholders, clients, or business teams.

- Focus: usability, workflows, business requirements.

- Goal: confirm the website or product solves the right problems in the right way.

UAT is the field test: real users give the final thumbs-up.

UAT process overview

A typical UAT process looks like this:

- Prepare environment – set up a staging site mirroring production.

- Define scenarios – map workflows against business requirements.

- Execute tests – stakeholders walk through end-to-end flows.

- Log issues – capture gaps, usability problems, or missing requirements.

- Retest and sign-off – fixes are validated; stakeholders approve release.

Example: In an e‑commerce UAT, a business rep might add items to a cart, apply discounts, attempt invalid payments, and complete checkout to confirm real-world readiness.

QA vs UAT: Key differences

In short: QA = technical verification. UAT = business validation.

Why people confuse QA and UAT

The overlap is real:

- Both happen pre-launch.

- Both aim to prevent production bugs.

- Many companies use “QA” as shorthand for all testing.

The danger is assuming that a technically flawless website or product will automatically work the way end-users expect.

Why both QA and UAT matter

- QA without UAT: Stable code, but workflows may not fit business needs.

- UAT without QA: User sign-off, but hidden regressions or performance issues.

Example: QA catches a memory leak that crashes the site under load. UAT confirms the checkout flow aligns with the finance team's reporting.

Some teams treat UAT like an internal beta testing stage, gathering feedback before full release.

Together, QA and UAT help ensure your website or product is both technically reliable and ready for real users.

Test cases, scenarios, and environments in QA vs UAT

QA and UAT both rely on test cases, but their focus differs:

- QA test cases: Detailed scripts that check functionality, integrations, and edge cases. Run in controlled testing environments that mimic production. Cover unit, regression, performance, and integration testing.

- UAT test scenarios: High-level workflows that reflect real business use. Run in a staging environment close to production. Cover end-to-end journeys and business-critical flows.

Example: QA test case = “Verify login form locks after three failed attempts.” UAT scenario = “Sales rep completes an entire order from cart to invoice.”

Methodology differences in QA vs UAT

- QA methodology: Typically follows the development team's own methodology (Agile sprints, DevOps CI/CD pipelines, automated regression suites). Focuses on continuous testing throughout the SDLC (software development lifecycle).

- UAT methodology: Structured around project milestones, business requirements, and stakeholder sign-off. Often managed with checklists and acceptance criteria tied to user stories.

In practice, QA aligns with the development team’s processes, while UAT aligns with project management and stakeholder approval.
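UAT acceptance criteria usually live in a checklist or PM tool rather than in code, but modeling them shows how sign-off tied to user stories works. This is a minimal sketch under stated assumptions: `UserStory`, `AcceptanceCriterion`, `ready_for_sign_off`, and `open_items` are hypothetical names, and the rule that every criterion must be explicitly passed before release is one common convention, not a standard.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class AcceptanceCriterion:
    description: str
    passed: Optional[bool] = None  # None means no stakeholder has checked it yet
    note: str = ""  # stakeholder feedback, e.g. "error message is confusing"


@dataclass
class UserStory:
    title: str
    criteria: List[AcceptanceCriterion] = field(default_factory=list)

    def accepted(self) -> bool:
        # Accepted only if the story has criteria and every one was explicitly passed.
        return bool(self.criteria) and all(c.passed is True for c in self.criteria)


def ready_for_sign_off(stories: List[UserStory]) -> bool:
    # Hypothetical rule: release sign-off requires every in-scope story to be accepted.
    return all(story.accepted() for story in stories)


def open_items(stories: List[UserStory]) -> List[Tuple[str, str, str]]:
    # Failed or untested criteria as (story, criterion, note) rows for the bug tracker.
    return [
        (story.title, c.description, c.note)
        for story in stories
        for c in story.criteria
        if c.passed is not True
    ]


if __name__ == "__main__":
    checkout = UserStory(
        "Shopper completes checkout",
        [
            AcceptanceCriterion("Discount code reduces the cart total", passed=True),
            AcceptanceCriterion("Declined card shows a clear error", passed=False,
                                note="Error text is too generic"),
            AcceptanceCriterion("Order appears in the finance report"),
        ],
    )
    print(ready_for_sign_off([checkout]))  # False
    for row in open_items([checkout]):
        print(row)
```

Tying each criterion to a user story mirrors how UAT sign-off is usually discussed: the release waits on the stories, and each row from `open_items` becomes a ticket for the development team.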

Tools that make QA and UAT easier

Bug reporting is where both camps struggle:

- QA engineers want structured tickets with logs and environment data.

- UAT stakeholders just want to point and say, “This doesn’t work.”

That’s where Marker.io helps:

- QA testers send annotated screenshots, console logs, and metadata directly into Jira, Trello, Asana, or another PM tool.

- UAT stakeholders use the same widget to click, comment, and submit feedback without touching technical fields.

- All reports funnel into one system, so developers never lose context.

No screenshots over email and no missing details: just clean, standardized bug reports.

Final thoughts

As a recap:

- QA ensures the website/product works.

- UAT ensures the website/product works for its users.

Skip one, and you risk either broken code or broken workflows. Use both, and you launch with confidence, consistently delivering high-quality software.

Want to make the hand-off seamless? Try tools like Marker.io to keep QA and UAT feedback flowing in one place.

What should I do now?

Here are three ways you can continue your journey towards delivering bug-free websites:

Check out Marker.io and its features in action.

Read Next-Gen QA: How Companies Can Save Up To $125,000 A Year by adopting better bug reporting and resolution practices (no e-mail required).

Follow us on LinkedIn, YouTube, and X (Twitter) for bite-sized insights on all things QA testing, software development, bug resolution, and more.

Frequently Asked Questions

Who is Marker.io for?

Marker.io is for teams responsible for shipping and maintaining websites who need a simple way to collect visual feedback and turn it into actionable tasks.

It’s used by:

- Organizations managing complex or multi-site websites

- Agencies collaborating with clients

- Product, web, and QA teams inside companies

Whether you’re building, testing, or running a live site, Marker.io helps teams collect feedback without slowing people down or breaking existing workflows.

How easy is it to set up?

Embed a few lines of code on your website and start collecting client feedback with screenshots, annotations, and advanced technical metadata! We also have a no-code WordPress plugin and a browser extension.

Will Marker.io slow down my website?

No, it won't.

The Marker.io script is engineered to run entirely in the background and should never cause your site to perform slowly.

Do clients need an account to send feedback?

No, anyone can submit feedback and send comments without an account.

How much does it cost?

Plans start as low as $39 per month. Each plan comes with a 15-day free trial. For more information, check out the pricing page.
